Introduction

Video cards are the hardware responsible for generating the images displayed on a computer’s screen (the same applies to video game consoles, smartphones, and so on). There is such a variety of cards, with so many distinct features, that it is essential to know at least the main characteristics of these devices and to understand a little about how they work. That way, you will know how to choose the model best suited to your needs. For this reason, AbbreviationFinder presents the following concepts related to video cards, starting with the GPU and then moving on to GDDR memory, 3D, shaders, stream processors, and more.

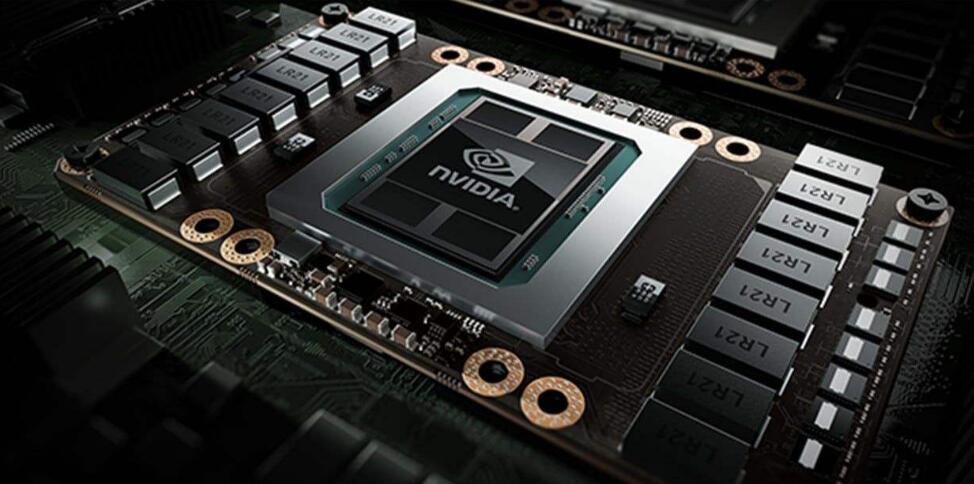

What is the GPU?

The GPU (Graphics Processing Unit), also called the graphics chip, is certainly the most important component of a video card. It is, in short, a type of processor in charge of executing the calculations and routines that result in the images displayed on the computer’s monitor.

As with CPUs, there is a wide variety of GPUs on the market, from the most powerful, developed especially for processing complex 3D graphics (for running games or producing movies, for example), to the simplest, built with a focus on the low-cost computer market. There are several GPU manufacturers, but the best-known companies are NVIDIA, AMD (formerly ATI) and Intel, with the first two being the most popular when it comes to more sophisticated graphics chips.

You may argue that you have seen video cards from other brands, such as Gigabyte, Asus, Zotac and XFX, among others. Note, however, that these companies manufacture the cards but do not produce GPUs. It is up to them to put GPUs on their cards along with other resources, such as memory and connectors (subjects that are also covered in this article). It is also important to note that GPUs can be built directly into computer motherboards, in which case they are popularly called “onboard video”.

Characteristics of a GPU

The GPU emerged to “relieve” the computer’s main processor (CPU) of the heavy task of generating images. For this reason, it is able to handle a large volume of mathematical and geometric calculations, a basic requirement for processing 3D images (used in games and computerized medical exams, among others).

To generate images, the GPU runs a sequence of steps that involve building geometric elements, applying colors, inserting effects, and so forth. In very summarized form, this sequence consists of the GPU receiving a set of vertices (a vertex is the meeting point of the two sides of an angle); processing this information so that it takes on geometric context; applying effects, colors and the like; and transforming all of this into elements formed by pixels (a pixel is a point that represents the smallest part of an image), a process known as rasterization. The next step is sending the information to the video memory (frame buffer) so that the final content can be displayed on the screen.

GPUs can rely on several resources to carry out these steps, among them:

– Pixel Shader: a shader is a set of instructions used to process image rendering effects. A Pixel Shader, therefore, is a program that generates effects on a per-pixel basis. This feature is widely used in 3D images (games, for example) to generate lighting, reflection and shading effects, among others;

– Vertex Shader: similar to the Pixel Shader, except that it works with vertices instead of pixels. A Vertex Shader is thus a program that works with structures formed by vertices, and therefore deals with geometric figures. This feature is used for modeling the objects to be displayed;

– Render Output Unit (ROP): basically, it manipulates the data stored in video memory so that it “becomes” the set of pixels that forms the images displayed on the screen. These units are responsible for applying filters and depth effects, among others;

– Texture Mapping Unit (TMU): a type of component able to rotate and resize bitmaps (basically, images formed by sets of pixels) in order to apply a texture onto a surface.

GPUs implement these resources in components whose quantities vary from model to model. As you saw above, for example, there are units for Vertex Shaders and units for Pixel Shaders. In principle, and depending on the application, this scheme proves advantageous. However, there may be situations in which units of one type or the other run short, creating an imbalance that hurts performance. To deal with this, many current graphics chips use stream processors, that is, units that can take on the role of either Vertex Shaders or Pixel Shaders, according to the needs of the application.
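The imbalance described above can be illustrated with a toy model in Python. This is only a sketch under simplifying assumptions: the function names, the unit counts and the workload figures are all hypothetical, work is assumed to divide evenly among units, and scheduling overhead is ignored.

```python
def fixed_units_time(vertex_work, pixel_work, vertex_units, pixel_units):
    """Time with dedicated units: the slower of the two groups sets the pace."""
    return max(vertex_work / vertex_units, pixel_work / pixel_units)

def unified_units_time(vertex_work, pixel_work, total_units):
    """Time with stream processors: any unit can take either kind of work."""
    return (vertex_work + pixel_work) / total_units

# Hypothetical vertex-heavy scene on a chip with 8 vertex and 24 pixel units.
vertex_work, pixel_work = 800, 800
fixed = fixed_units_time(vertex_work, pixel_work, vertex_units=8, pixel_units=24)
unified = unified_units_time(vertex_work, pixel_work, total_units=32)

print(fixed)    # 100.0 (the 8 vertex units are the bottleneck)
print(unified)  # 50.0  (the same 32 units share all the work)
```

In this model the unified design is never slower than the fixed split, which is the intuition behind stream processors; real chips involve many more factors.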

In general, you can find the details that describe these and other GPU resources in your video card’s manual or on the manufacturer’s website. It is also possible to use programs that provide this information, such as the free GPU-Z, for Windows.

GPU clock

Among the various fields that a program such as GPU-Z displays, there is one called “GPU Clock”. So what is this? Well, if the GPU is a type of processor, then it works at a certain frequency, that is, a clock. In general terms, the clock is a synchronization signal. When the computer’s devices receive the signal to carry out their activities, this event is called a “clock pulse”. On each pulse, the devices perform their tasks, stop, and move on to the next clock cycle.

Clock speed is measured in hertz (Hz), the standard unit of frequency, which indicates the number of oscillations or cycles that occur within a given period of time, in this case, one second. Thus, when a device works at 900 Hz, for example, it means that it can handle 900 clock cycles per second. For practical purposes, the term kilohertz (kHz) is used to indicate 1000 Hz, just as megahertz (MHz) is used to indicate 1000 kHz (or 1 million hertz). In the same way, gigahertz (GHz) is the name used for 1000 MHz, and so on. So if a GPU has, for example, a frequency of 900 MHz, it means that it can work with 900 million cycles per second (this explanation was taken from this article about processors).
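The unit arithmetic above can be checked with a few lines of Python. This is just a sketch; the helper name `to_hertz` and the lookup table are hypothetical, introduced only for illustration.

```python
# Multipliers for the frequency units mentioned in the text.
UNIT_FACTORS = {"Hz": 1, "kHz": 1_000, "MHz": 1_000_000, "GHz": 1_000_000_000}

def to_hertz(value, unit):
    """Convert a frequency to hertz, i.e. clock cycles per second."""
    return value * UNIT_FACTORS[unit]

print(to_hertz(900, "MHz"))  # 900000000 cycles per second
print(to_hertz(1, "GHz") == to_hertz(1000, "MHz"))  # True
```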

Thus, the higher the frequency of a GPU, the better its performance, at least in theory, since this depends on a combination of factors, such as the amount of memory and the bus speed. Clock is therefore an important feature, but the user does not need to worry too much about it, not least because, in newer video cards, certain components can work at frequencies different from the one used by the GPU itself, such as the units responsible for processing shaders.

Resolution and color

When you buy a video card, an important feature usually described in the device’s specifications is its maximum resolution. When we talk about this aspect, we are referring to the set of pixels that form horizontal and vertical lines on the screen. Take a resolution of 1600×900 as an example. This value indicates that there are 1600 pixels horizontally and 900 pixels vertically.

Of course, the higher the resolution supported, the greater the amount of information that can be displayed on the screen, provided that the monitor is able to handle the values supported by the video card. Within the maximum limit, the user can change the resolution through specific features of the operating system, where it is also possible to change the number of colors the video card works with.
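To put the 1600×900 example in numbers, the short Python sketch below multiplies the horizontal and vertical pixel counts to get the total number of pixels on screen. The function name is hypothetical, and the second resolution is included only for comparison.

```python
def total_pixels(width, height):
    """Number of pixels in a screen of width x height."""
    return width * height

print(total_pixels(1600, 900))   # 1440000
print(total_pixels(1024, 768))   # 786432
```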

For a long time, the combination of resolution and color information indicated the standard used by the video card. Here are the most common standards:

MDA (Monochrome Display Adapter): the standard used in the first PCs, indicating that the card was capable of displaying 80 columns by 25 lines of characters, supporting only two colors. It was used at a time when computers worked essentially with command lines;

CGA (Color Graphics Adapter): a standard more advanced than MDA and, therefore, more expensive, usually supporting resolutions of up to 320×200 pixels (it could reach 640×200) with up to 4 colors at the same time out of 16 available;

EGA (Enhanced Graphics Adapter): the standard used in the then revolutionary PC AT, usually supporting a resolution of 640×350 with 16 colors at the same time out of 64 possible;

VGA (Video Graphics Array): a standard that became widely known with the Windows 95 operating system, working with a resolution of 640×480 and 256 colors simultaneously, or 800×600 with 16 colors at the same time;

SVGA (Super VGA): seen as an evolution of VGA, SVGA initially indicated a resolution of 800×600 pixels and, later, 1024×768. In fact, from SVGA onward, video cards came to support even more varied resolutions and millions of colors, so it is taken as the current standard.

As for the number of colors, it is determined by the number of bits assigned to each pixel. The calculation is as follows: raise 2 to the number of bits. So, for 8 bits per pixel, there are 256 colors (2 raised to 8 equals 256). For 32 bits, there are 4,294,967,296 colors.
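The same calculation can be written as a one-line Python function. The name `color_count` is hypothetical, and the 16-bit and 24-bit cases are added only as further illustrations of the formula.

```python
def color_count(bits_per_pixel):
    """Number of distinct colors representable with the given bits per pixel."""
    return 2 ** bits_per_pixel

for bits in (8, 16, 24, 32):
    print(bits, "bits:", color_count(bits), "colors")
```

For 8 bits this gives 256 and for 32 bits it gives 4,294,967,296, matching the figures in the text.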

If you want more details, read this guide about resolutions, which includes HD, 4K and other standards not mentioned here.

GDDR – video memory

Another extremely important item in a video card is the memory. Its speed and amount can significantly influence the device’s performance. This type of component does not differ much from the RAM typically used in PCs, and it is relatively common to find cards that use DDR, DDR2 and DDR3 memory chips. However, the most advanced and current cards rely on a type of memory specific to graphics applications: GDDR (Graphics Double Data Rate) memory.

GDDR memories are similar to DDR memory types, but are specified independently. Basically, what changes between these technologies are characteristics such as voltage and frequency. At the time this article was finalized at AbbreviationFinder, it was possible to find five types of GDDR memory: GDDR1 (or just GDDR), GDDR2, GDDR3, GDDR4 and GDDR5.

Working with a voltage of 2.5 V and frequencies of up to 500 MHz, GDDR1 memory (which is pretty much standard DDR) was widely used for a while, but soon lost ground to GDDR3 memory (at least on more advanced cards). The GDDR2 type saw little use, being employed almost exclusively in NVIDIA’s GeForce FX 5700 Ultra and GeForce FX 5800 lines, because although it works with frequencies of up to 500 MHz, its voltage is 2.5 V, resulting in a big problem: excessive heat.

GDDR3 memory emerged as a solution to this problem because it works with a voltage of 1.8 V (in some cases it can also work with 2 V) and combines this with more speed, since it works with 4 data transfers per clock cycle (against two in the earlier standards). Its frequency is generally 900 MHz, but it can reach 1 GHz. Technically, it is similar to DDR2 memory.

GDDR4 memory, in turn, is similar to DDR3, handles 8 transfers per clock cycle, and uses a voltage of only 1.5 V. In addition, it employs technologies such as DBI (Data Bus Inversion) and Multi-Preamble to reduce the “delay”, that is, the waiting time in data transmission. However, its frequency remains, on average, around 500 MHz, due to its “susceptibility” to noise (interference) problems. For this reason, this GDDR memory technology has had low acceptance in the market.

As for GDDR5 memory, this type supports frequencies similar to, and even slightly higher than, the rates used by the GDDR3 standard, but it works with 8 transfers per clock cycle, significantly increasing its performance. In addition, it also has technologies such as DBI and Multi-Preamble, not to mention mechanisms that offer better protection against errors.

GDDR3 and GDDR5 memories are the most used in the market, the latter being commonly found on ATI’s most advanced video cards.

And how much memory should my video card have? If you are asking this question, know that, contrary to what many people think, more is not always better. This is because certain applications (games, mainly) can only make use of up to a certain amount of memory. Beyond that, the remaining memory is not used.

The ideal is to look for cards that offer features compatible with your current needs, including with regard to memory. At the time this article was written, it was quite common to find cards with capacities of 512 MB and 1 GB, in addition to more advanced models with 2 GB. In general, the more advanced the GPU, the more memory should be used so as not to compromise performance.

But note that there is little value in having a reasonable amount of memory and, at the same time, a narrow memory bus. The bus, in this case, corresponds to the communication lanes that allow data to be transferred between the memory and the GPU. The wider the bus, the more data can be transferred at a time. Low-cost cards use buses that allow a maximum of 128 bits to be transferred at a time. At the time of writing, more sophisticated cards worked with at least 256 bits, and it was already possible to find “top of the line” models with a 512-bit bus.
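To see why bus width matters, the Python sketch below converts each bus width from the text into bytes moved per transfer and then estimates a theoretical peak rate. The function names are hypothetical, and the figure of one billion transfers per second is an arbitrary assumption chosen only to make the comparison easy to read, not a specification of any real card.

```python
def bytes_per_transfer(bus_width_bits):
    """Bytes moved in a single transfer over a bus of the given width."""
    return bus_width_bits // 8

def peak_bytes_per_second(bus_width_bits, transfers_per_second):
    """Theoretical peak rate, ignoring any real-world overhead."""
    return bytes_per_transfer(bus_width_bits) * transfers_per_second

ASSUMED_TRANSFERS_PER_SECOND = 1_000_000_000  # hypothetical value

for width in (128, 256, 512):
    rate = peak_bytes_per_second(width, ASSUMED_TRANSFERS_PER_SECOND)
    print(width, "bits:", bytes_per_transfer(width), "bytes per transfer,",
          rate // 10**9, "GB/s at the assumed rate")
```

With everything else equal, doubling the bus width doubles the amount of data that can reach the GPU in the same time.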

On motherboards that come with a graphics chip (onboard), video memory is, in fact, a part of the computer’s RAM. In the great majority of cases, the user can choose the amount of memory available for this purpose in the BIOS setup. Some motherboard models also include built-in video memory, but they are less common.

To make video cards cheaper, manufacturers have also launched models that, although not integrated into the motherboard, use the card’s own video memory and also part of the machine’s RAM. Two technologies for this are NVIDIA’s TurboCache and ATI’s HyperMemory. If you care about your computer’s performance, you should avoid this type of device.

3D

Nowadays, it is virtually impossible to talk about video cards without considering 3D graphics (graphics in three dimensions). This type of resource is essential for the entertainment industry, where 3D movies and games are a great success. Because of this, people want and need their computers to be able to handle this type of application.

The problem is that it is not enough simply to have a card that runs 3D resources. You need to know how far that capacity goes, since the industry constantly releases games and other 3D applications that are more and more refined, all in the name of the greatest possible realism. It so happens that the more advanced an application’s images are, the more graphics processing is needed. For this reason, when choosing a video card, the user should pay attention to the characteristics of the device (clock, amount of memory, shader execution, among others).

It is also important to know some concepts related to 3D processing:

– Fillrate: the measure of the number of pixels that the graphics chip is able to render per second, also called “pixel fillrate”. In other words, it is a measure of pixel processing. This matters because, when a 3D image is generated, it must subsequently be transformed into 2D so that it can be viewed on the screen, a task carried out by transforming the information into pixels. Note that there is also the “texel fillrate”, which measures, also per second, the video card’s capacity to apply textures;

– Frames per Second (FPS): as the name indicates, FPS indicates the number of frames displayed on the screen per second. When a movie is played, for example, it is in fact composed of a sequence of images, as if they were several pictures in a row. Each of these “pictures” is a frame. As a rule, the greater the number of frames per second, the better the user’s perception of the movements on screen. If the FPS is too low, the user will have the impression that the images are briefly “freezing” on the screen. FPS is especially important in games, where slowness in generating the image can harm the player’s performance. For games, the ideal is at least 30 FPS, so that a minimum visual quality can be obtained. However, generating images is often such heavy work that the FPS drops. In these cases, the user can disable certain effects. With powerful video cards, it is possible to keep the FPS at a higher rate without having to disable effects. This is why many people prefer cards that handle, for example, 100 FPS: when this rate falls, the amount available will still be satisfactory;

– V-Sync: one way to make the display of images more comfortable to the human eye is to turn on V-Sync. This is a feature that synchronizes the FPS rate with the monitor’s update frequency (refresh rate). This measure tells how many times per second the screen updates the displayed image. If it is 60 times, for example, the refresh rate is 60 Hz. Synchronization can make images appear more “natural”, as it helps avoid uncomfortable effects such as tearing, which usually occurs when the FPS is greater than the monitor’s refresh rate, causing a “torn” look in images with a lot of movement;

– Antialiasing: a feature that is extremely important for improving the quality of the displayed image. Often, because of the limitations of the monitor’s resolution, 3D objects appear on the screen with “jagged” edges, as if someone had cut them out very roughly with a pair of scissors. Antialiasing filters can mitigate this problem quite satisfactorily, but they may require a lot of processing resources;

– Anisotropic Filtering: also known by the initials AF, this feature eliminates or mitigates the “blurry image” effect in textures, especially when they represent inclined surfaces. This impression gets worse when the user views the texture up close (as if a “zoom” had been applied). AF can reduce this problem to such an extent that images not only become clearer but also gain a better sense of depth. Anisotropic Filtering came as a replacement for Bilinear Filtering and Trilinear Filtering, which had the same purpose but not the same efficiency.

Note that, depending on the application (especially games), it is possible to enable, disable, and configure the use of these resources. A player can disable antialiasing, for example, to make the game require less processing and eliminate the resulting freezes.
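As a rough illustration of the FPS and V-Sync concepts above, the following Python sketch converts a frame rate into the time available to generate each frame and applies the rule of thumb from the text that tearing tends to appear when the FPS exceeds the monitor’s refresh rate. The function names are hypothetical and the model is deliberately simplified.

```python
def frame_time_ms(fps):
    """Time budget, in milliseconds, for generating one frame."""
    return 1000.0 / fps

def may_tear(fps, refresh_rate_hz):
    """Rule of thumb from the text: tearing tends to occur when the
    FPS is greater than the monitor's refresh rate."""
    return fps > refresh_rate_hz

for fps in (30, 60, 100):
    print(fps, "FPS ->", round(frame_time_ms(fps), 2), "ms per frame")

print(may_tear(100, 60))  # True: 100 FPS exceeds a 60 Hz refresh rate
print(may_tear(30, 60))   # False
```

At 30 FPS the card has about 33 ms to produce each frame, while at 100 FPS it has only 10 ms, which is why heavier effects push the frame rate down.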

DirectX and OpenGL

Making graphics applications take advantage of all the power of GPUs is no easy task. In the era of the first PCs, for example, it was even feasible to program software to directly access the resources of graphics chips, but with the passage of time and the evolution of 3D computing, this task became increasingly onerous and complex. With this in mind, APIs (Application Programming Interfaces) aimed at graphics applications emerged. These are, basically, sets of “ready-made” instructions that allow developers to create graphics content faster and more easily.

One of the first and most important APIs geared to this end was Glide, which has since fallen into disuse in the industry. Today, the market is based essentially on two APIs: DirectX and OpenGL.

DirectX, which is actually a set of APIs for audio and video applications, belongs to Microsoft and is therefore widely used on Windows operating systems. As this platform is quite popular all over the world, there are numerous applications that use DirectX, especially games. So it is only natural that the major GPU manufacturers make their graphics chips compatible with this technology.

At the time this article was finalized at AbbreviationFinder, the latest version of DirectX was 11. Naturally, the more recent the version, the more features it offers. The problem is that it is not enough to have the latest version on the operating system: the video card also needs to be compatible with it. Thus, if the user wants, for example, to take advantage of all the features of a game that is compatible with the latest version of DirectX, the video card may have to be replaced.

OpenGL, in turn, has purposes similar to those of DirectX, but with one big difference: it is an open technology and is therefore freely available for various platforms. This makes it easier to create versions of the same application for different operating systems.

The advantage of being open is that OpenGL allows a large number of applications to be developed without limiting this work to a single platform. But although it is widely used today, OpenGL runs into a big problem: it is updated more slowly and less innovatively than DirectX, which prevents it from being used more in graphics applications, especially in newer games.

An interesting observation is that certain applications allow the user to choose between OpenGL and DirectX. You can therefore test both and use the one that performs better. One of the programs that allow this choice is Google Earth for Windows. Another example is the train simulator Trainz Simulator.

Buses

For the video card to communicate with the computer, a standardized communication technology must be used or, more precisely, a bus. There are various technologies for this, some of them exclusive to video cards.

When choosing a video card, it is important to check whether the bus used by the device exists on your computer’s motherboard, since each technology has a different slot, that is, a different connector.

One of the first buses used was ISA (Industry Standard Architecture), which emerged in the era of the IBM PC. Its first version worked with 8 bits at a time and a clock of 8.33 MHz, but a 16-bit version soon appeared that was able to transfer up to 8 MB of data per second. ISA technology allowed the connection not only of video cards but also of a number of other components.

In the early 1990s came the PCI (Peripheral Component Interconnect) bus, which handles 32 bits at a time at a clock of 33 MHz, resulting in transfer rates of up to 132 MB per second (there was also a 64-bit version clocked at 66 MHz, but it was little used by the industry). It is a standard now in disuse, although it is not difficult to find relatively recent motherboards that still support it. A wide variety of video card models used this technology. Like ISA, it was also used for other types of devices, such as modem cards and network adapters.

The fact is that the evolution of GPUs is constant and, soon, the PCI standard proved unable to cope with the amount of data used by graphics chips. The solution to this issue came in 1996, with the release of the AGP (Accelerated Graphics Port) bus, created specifically for video cards.

The first version of AGP works with 32 bits at a frequency of 66 MHz, resulting in a maximum transfer rate of 266 MB per second, which can be doubled thanks to the technology’s ability to transfer 2 pieces of data per clock cycle (2X mode). The latest version of AGP, 3.0, is able to work at up to 8X, resulting in transfer rates of up to 2,133 MB per second.
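The PCI and AGP figures quoted above follow from a simple product: bytes per transfer, times clock frequency, times transfers per clock cycle. The Python sketch below reproduces them. The function name is hypothetical, and the AGP clock is taken as 66.66 MHz (the nominal “66 MHz” rounded down), which is an assumption made here to arrive at the quoted 266 and 2,133 MB per second.

```python
def transfer_rate_mb_s(bus_width_bits, clock_mhz, transfers_per_clock=1):
    """Theoretical peak rate in MB per second for a parallel bus."""
    return bus_width_bits / 8 * clock_mhz * transfers_per_clock

pci = transfer_rate_mb_s(32, 33)            # PCI: 32 bits at 33 MHz
agp_1x = transfer_rate_mb_s(32, 66.66)      # AGP 1X
agp_2x = transfer_rate_mb_s(32, 66.66, 2)   # AGP 2X: 2 transfers per cycle
agp_8x = transfer_rate_mb_s(32, 66.66, 8)   # AGP 8X (AGP 3.0)

print(pci)            # 132.0
print(round(agp_1x))  # 267, quoted as 266 MB per second in the text
print(round(agp_2x))  # 533
print(round(agp_8x))  # 2133
```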

Although it allowed great advances, AGP could not withstand PCI Express technology, which stands out for being available in several forms, such as 1X, 2X, 8X and 16X. Devices that work with lower data transfer rates can use PCI Express 1X, for example, because its slot is a lot smaller. Video cards, however, work with PCI Express 16X, which allows transfer rates of about 4 GB per second. With PCI Express 2.0, introduced in 2007, this value can double.

Video connectors

All the results of a GPU’s work end up in one place, which is obviously the computer’s monitor. For this, the monitor must be connected to the video card. There are basically two standards used for this purpose: VGA (Video Graphics Array) and DVI (Digital Visual Interface) connectors.

VGA, whose connector is in fact called D-Sub, is made up of a set of up to 15 pins. It is a well-known standard, but one that is increasingly falling into disuse. This is because VGA connectors were the standard on CRT (Cathode Ray Tube) monitors, which lost ground to LCD (Liquid Crystal Display) monitors.

The problem is that CRT monitors need to work with digital-to-analog signal conversion, whereas LCD monitors work only with digital signals. Because of this, VGA connectors, which were developed with a focus on CRT monitors, end up causing a loss of image quality when used with LCD monitors. The solution was the creation of an all-digital standard, DVI.

The industry then started to put video cards on the market that offer both VGA and DVI connections. More recent models, however, work only with the latter. The most current cards also include HDMI connections.

SLI and CrossFire

If one video card does not provide the expected performance for an application, why not make two (or more) cards work together? That is exactly the goal of the SLI and CrossFire technologies. The first, whose acronym initially stood for Scan-Line Interleave, was created by the company 3Dfx and essentially divided the lines of the image so that each graphics chip was responsible for processing half. 3Dfx was later bought by NVIDIA, so SLI technology came to be associated with the latter and had its meaning changed to Scalable Link Interface. CrossFire, in turn, is a technology implemented by ATI.

To use these technologies, the motherboard must have two identical slots for the interconnection of two video cards. In most cases, it is necessary to use a device in the form of a cable or connector, called a “bridge”, that interconnects the two cards working together and that may come with the motherboard or be purchased separately. In general, it is necessary to use identical cards (or at least cards with the same specifications) with these technologies, although some flexibility is possible, especially with CrossFire.

Note that using SLI or CrossFire in your computer requires a suitable motherboard and two video cards, increasing the cost of the equipment. It is therefore advisable to do a lot of research to see whether these technologies are worth using. If you decide that they are, look for video cards that are suitable for this. In some cases, it may be more worthwhile to acquire cards that contain two GPUs, even though they are expensive and difficult to find, especially in Brazil.

Electrical power

Often, when swapping a video card for a newer one, the user does not realize that it may also be necessary to change the computer’s power supply. This does not always happen, but when it does, the user notices the need when the machine shuts down or restarts by itself, for example, indicating, among other possibilities, that the video card is consuming more energy than the power supply is able to provide. There is a reason for this: video cards are among the most power-hungry components in a computer.

To meet this need, some video cards come with connectors that accept hard drive power cables, which are often left unused in a computer. This makes it possible to get more electricity when the power provided through the slot is not enough. More current power supplies, however, offer specific cables for supplying power to devices connected to PCI Express slots. The connectors on these cables usually have 6 pins, although it is also possible to find versions with 8. In some cases, this type of cable must be plugged into a connector on the motherboard close to the PCI Express slot, but in most cases the connection is made directly on the video card.

Because of this, it is extremely important to verify, before making the purchase, whether the motherboard and the computer’s power supply have the resources required for the chosen video card. Depending on the case, it will be necessary to use adapters or even to replace a component.

Conclusion

Based on the information provided on this page, you will be able to evaluate the essential characteristics of a video card before you purchase it. You will notice that more advanced models offer more memory, a higher GPU clock, and a larger number of stream processors, among other things. But of course, the more sophisticated the device, the higher the price. So you must also take into account what your needs are in relation to the video card.

If you just want to play videos and casual games that do not require a lot of processing, a low-cost card will be enough. But if you want a PC capable of running the latest games, there is no way around it: the more advanced your video card, the better. Fortunately, most titles allow you to disable certain visual features. This makes it possible to run them even on mid-range cards.

Before closing, an “important curiosity”: in a way, CPUs and GPUs are quite similar devices, but since the latter constantly deal with 3D images, they are better prepared for parallel processing. It turns out that this ability is not useful only in games or in generating 3D graphics. There are a number of other applications that can benefit from it.

The industry has created specific technologies to make it easier to use more recent GPUs in activities not directly related to graphics processing. Two of them are OpenCL and CUDA. The first is linked to the organization that maintains the OpenGL standard. The second was developed by NVIDIA to work with its chips and stands out for allowing the use of instructions in the C language, although adaptations also allow the use of Java, Python and others. According to NVIDIA, the technology has been used in scientific research and in financial market analysis tools, for example.

You can learn more about the subject by visiting the website gpgpu.org or by searching for the term “GPGPU” (General-Purpose GPU).